The United States is often called the home of the best doctors, the best hospitals, and the most advanced medical technology. And in many ways, that’s true. Some of the world’s top medical professionals and groundbreaking treatments are based in America.

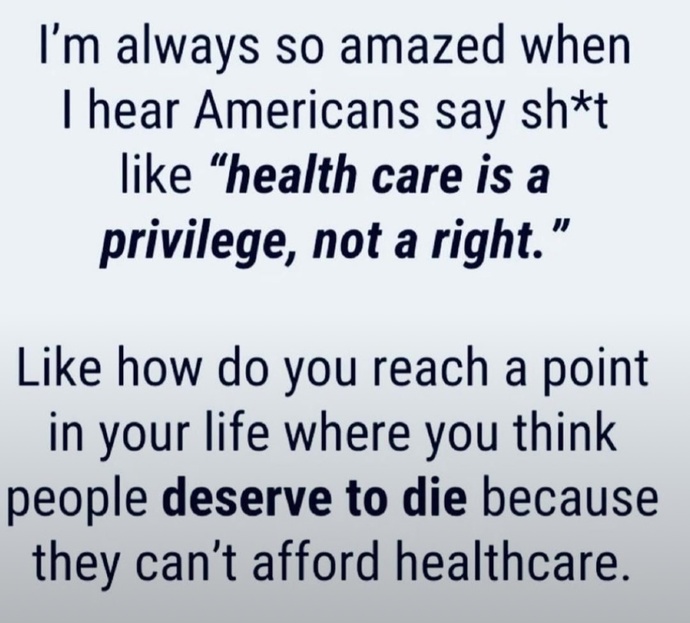

But here’s the problem. What good is having the best doctors if ordinary people can’t even afford to see them? Healthcare in the U.S. has become so expensive that millions of citizens either avoid treatment altogether or drown in debt just to get basic care. That doesn’t sound like a system designed to protect people’s health; it sounds like a system designed to protect profits.

So here’s my question: should America loosen its strict capitalism when it comes to healthcare? Should doctors and the government be willing to make sacrifices so that access to healthcare isn’t a privilege for the wealthy, but a right for everyone?

Many European countries, for example, have managed to build systems where people don’t go bankrupt just because they got sick. If countries with fewer resources can do it, why can’t the richest country in the world? Maybe it’s time for the U.S. to rethink its priorities and move a little closer to a model that actually puts people first.

What do you all think? Is the American system broken beyond repair, or is it exactly what people want?
